Abstract:

Much of the recent progress on many AI tasks was enabled in part by the availability of large quantities of labeled data for deep learning. Yet humans are able to learn new concepts or tasks from as little as a handful of examples. Meta-learning has been a promising framework for addressing the problem of generalizing from small amounts of data, known as few-shot learning. In this talk, I’ll present an overview of the state of this research area. I’ll describe Meta-Dataset, a new benchmark we developed to push the development of few-shot learning methods further towards a more realistic multi-domain setting. Notably, I’ll present the Universal Representation Transformer (URT) layer, which meta-learns to leverage universal multi-domain features for few-shot classification by dynamically re-weighting and composing the most appropriate domain-specific representations. The URT layer allows us to reach state-of-the-art performance on Meta-Dataset. I’ll end by discussing my perspective on promising future directions.

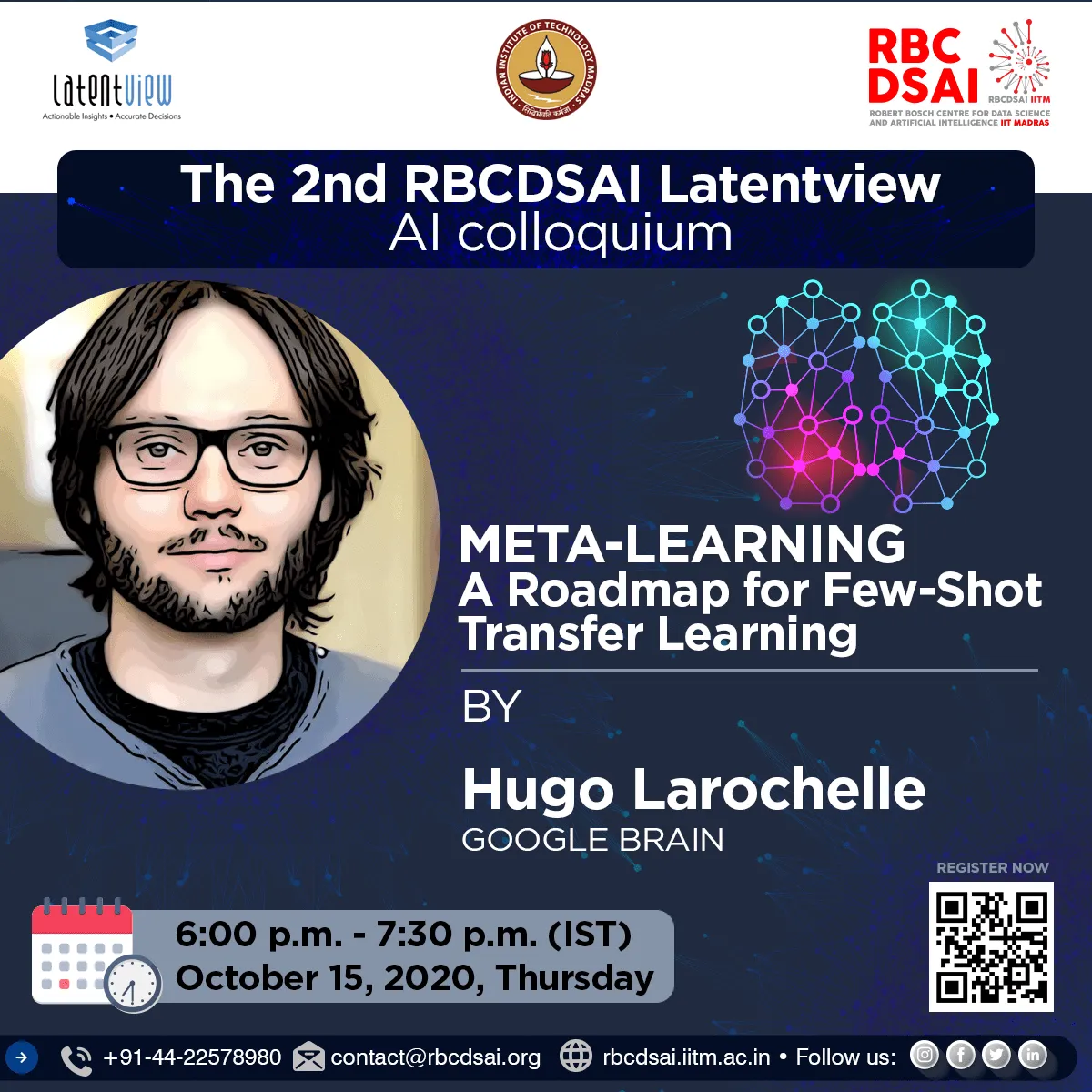

Bio:

Hugo Larochelle is a Research Scientist at Google Brain and lead of the Montreal Google Brain team. He is also a member of Yoshua Bengio’s Mila and an Adjunct Professor at the Université de Montréal. Previously, he was an Associate Professor at the University of Sherbrooke. Larochelle also co-founded Whetlab, which was acquired in 2015 by Twitter, where he then worked as a Research Scientist in the Twitter Cortex group. From 2009 to 2011, he was also a member of the machine learning group at the University of Toronto, as a postdoctoral fellow under the supervision of Geoffrey Hinton. He obtained his Ph.D. at the Université de Montréal, under the supervision of Yoshua Bengio. Finally, he has a popular online course on deep learning and neural networks, freely accessible on YouTube.

Click here for the video recording