Machines increasingly drive our world. The songs we are recommended, our risk of developing a disease in the future, forecasts for the stock market or the weather: computers predict them all, thanks to artificial intelligence (AI). Over the years, AI and machine learning have spurred rapid growth across many domains and are now in widespread use. Powered by AI, self-driving vehicles are ready to hit the road and robots are ready to carry out surgeries. As machine learning is set to change every aspect of our lives, a key dilemma troubles researchers and users alike: can we trust machines to make key life decisions for us? Can we rely solely on a machine to drive us safely to our destination without knowing the basis on which it works? Should we let a machine, rather than a doctor, operate on us?

This mistrust of artificial intelligence is partly due to the way it functions. Machine learning works by finding relevant patterns in large amounts of data and then classifying new data based on the features it has identified. For example, a computer model is given a training dataset of photographs of chairs and tables, each labelled as showing a chair or a table, and is expected to find the patterns that are unique to each. Once the model has learned these features, it uses them to tell the user whether a new photograph shows a chair or a table. The doubt arises because the model does not reveal which features it selected, and why it selected them mostly remains hidden. This leaves researchers and users unsure whether the computer has made the correct prediction or decision. To address this opacity, researchers are working to expose the hidden layers of machine learning models and confirm that the computer is picking up the relevant features when it makes predictions.
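The train-then-classify loop described above can be sketched in a few lines. This is only an illustration under stated assumptions: the two numeric features (surface height and surface width, in metres), the sample values, and the `train` and `predict` helpers are all hypothetical, and the model is a simple nearest-centroid classifier rather than a neural network. Real image models learn their features from pixels instead of being handed them, which is exactly why those features are hard to inspect.

```python
def train(examples):
    """Average the feature vectors of each label (a nearest-centroid model)."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(model, features):
    """Return the label whose average feature vector is closest to the input."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(model, key=lambda label: squared_distance(model[label]))


# Hypothetical labelled data: (surface height in m, surface width in m).
training_data = [
    ([0.45, 0.45], "chair"),
    ([0.50, 0.50], "chair"),
    ([0.75, 1.50], "table"),
    ([0.70, 1.20], "table"),
]

model = train(training_data)
label_a = predict(model, [0.48, 0.50])
label_b = predict(model, [0.72, 1.40])
print(label_a, label_b)
```

Here the features are visible because they were chosen by hand; in a neural network they are buried in the learned weights.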

“Neural networks have shown immense predictive performance in computer vision over the last few years. However, they act as an opaque black box and it is quite difficult to extract both nuggets of wisdom as well as indications of failure from such black box predictions,” says Dr. Harish G. Ramaswamy, Assistant Professor at IIT Madras and a member of the Robert Bosch Centre for Data Science and Artificial Intelligence, explaining the problem with neural networks, a machine learning technique.

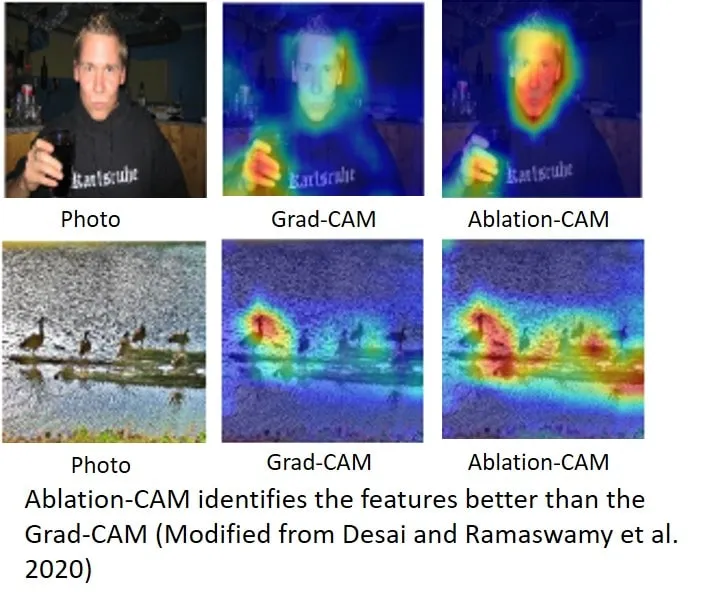

Dr. Ramaswamy and his team member Saurabh Desai have been looking for ways to make neural networks more explainable. In their recent research, they developed a technique called Ablation-CAM, which makes AI-based image recognition and processing more explainable and hence its results more trustworthy.

“With the improved performance of various image recognition models and their deployment in various practical situations, it becomes very important to open the deep learning black box and understand what the models are doing. Our previous work, Grad-CAM, in collaboration with colleagues at Georgia Tech and Virginia Tech, proposes a gradient-based technique for creating such visualizations and various experiments for evaluating the effectiveness of the explanations using human studies. Ablation-CAM is an interesting new work in this domain,” says Dr. Ramakrishna Vedantam, Research Scientist at Facebook, who works in a similar area.

Image recognition through computer vision is used in face recognition, security, medical image analysis, wildlife conservation and many other areas. Google image search is among the most commonly used AI-based image recognition tools. While the models behind these tools are tested to confirm that they accurately identify the object or person in an image, the characteristic features the model relies on remain unknown. For example, a model may accurately distinguish a cat from a dog, but it will not explain to its user why it classified a cat as a cat or a dog as a dog.

“There have been several approaches that attack this shortcoming (opaque black box nature), the most popular of which is Grad-CAM, and give a lens to view the decisions of the neural network. We address a major shortcoming of the Grad-CAM technique, in our Ablation-CAM approach, by providing a visualization technique that is robust to local irregularities and saturations in the neural network. We demonstrate the effects of this on several practical metrics,” adds Dr. Ramaswamy.

The researchers' new technique, Ablation-CAM, works by deleting specific features in the image and then checking whether the image is still recognized as it was before. If the recognition remains the same, the deleted, or ablated, portion is concluded not to be a characteristic feature used for recognition. Because Ablation-CAM carries out a series of such ablations across all portions of an image, the characteristic features the model uses to classify an object are revealed to the researcher.
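The ablate-and-recheck loop described above can be illustrated with a toy example. This is a minimal sketch of the idea only, not the authors' implementation: `toy_score` is a hypothetical stand-in for a classifier's confidence, the 4x4 "image" and the 2x2 patch size are arbitrary assumptions, and the helper names are made up for illustration.

```python
def toy_score(image):
    """Hypothetical stand-in for a classifier's confidence in one class.

    It only looks at the top-left 2x2 corner of the image, so that corner
    is the characteristic feature the ablation study should recover.
    """
    return sum(image[r][c] for r in range(2) for c in range(2)) / 4.0


def ablate(image, top, left, size):
    """Return a copy of the image with one size x size patch set to zero."""
    out = [row[:] for row in image]
    for r in range(top, min(top + size, len(image))):
        for c in range(left, min(left + size, len(image[0]))):
            out[r][c] = 0.0
    return out


def ablation_map(image, score_fn, size=2):
    """Relative drop in score when each patch is ablated, keyed by (top, left)."""
    base = score_fn(image)
    drops = {}
    for top in range(0, len(image), size):
        for left in range(0, len(image[0]), size):
            ablated_score = score_fn(ablate(image, top, left, size))
            drops[(top, left)] = (base - ablated_score) / base if base else 0.0
    return drops


image = [[1.0] * 4 for _ in range(4)]  # toy 4x4 image, all pixels "on"
importance = ablation_map(image, toy_score)
most_important = max(importance, key=importance.get)
print(most_important, importance)
```

A patch whose removal leaves the score unchanged gets an importance of zero, while a patch the scorer depends on produces a large drop, which is the signal used to build the visualization.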

“Unlike Grad-CAM which looks at the gradient of the loss function with respect to the input datapoint, Ablation-CAM ablates or drops certain features as a heuristic to generate such visualizations. Overall, the results appear promising and the approach seems to outperform Grad-CAM over a number of relevant, and interesting experiments,” adds Dr. Vedantam.
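One way to see why dropping a feature can tell a different story from a gradient is a saturated unit, where the score barely changes for small nudges even though the feature matters a great deal. The sketch below is a one-feature toy under stated assumptions: the `tanh` score, the activation value of 10, and the function names are all hypothetical and are not taken from the study.

```python
import math


def score(activation):
    """Hypothetical class score that saturates for large activations."""
    return math.tanh(activation)


def gradient(activation):
    """Analytic derivative of tanh: 1 - tanh(x)^2."""
    return 1.0 - math.tanh(activation) ** 2


def ablation_drop(activation):
    """Drop in score when the feature is removed (activation set to zero)."""
    return score(activation) - score(0.0)


activation = 10.0  # deep in the flat, saturated region of tanh
grad_signal = gradient(activation)
ablation_signal = ablation_drop(activation)
print(f"gradient: {grad_signal:.2e}, ablation drop: {ablation_signal:.4f}")
```

In this toy case the gradient is almost zero, suggesting the feature is irrelevant, while removing the feature wipes out nearly the whole score.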

Following the encouraging results from Ablation-CAM, the team plans to test the validity of the approach on tasks other than image classification, such as caption generation and question answering. They also intend to extend the insight to other machine learning architectures, such as recurrent neural networks and transformers, to broaden the scope of their newly developed tool.

Link to the article: Ablation-CAM paper

Keywords

neural network, black box, deep learning, visualization