When we have to drive from one place to another, we first think about the major route we will take, and then, while driving along that route, we worry about the small turns we need to make, the speed limit we need to follow and so on. These two levels of thinking, high-level planning and low-level execution, together make up hierarchical thinking. As humans, we think hierarchically: we first figure out the broad outline and then work on the nitty-gritty of a task or process. Efforts are under way to build this kind of hierarchical thinking and execution into machines as well.

A research team comprising researchers from RBCDSAI-IIT Madras, the University of Texas and Oregon State University has come up with an architecture named “RePReL” that combines an offline planner with an online reinforcement learning algorithm. Combining the two lets the architecture exploit their complementary strengths. The researchers have published the new architecture in the journal Neural Computing and Applications.

“Our work (RePReL) presents a framework that integrates classical planning with deep reinforcement learning to plan and act in open worlds. It can be used with any existing off-policy reinforcement learning algorithm and any planner for sequential decision-making in relational domains,” says Prof. Sriraam Natarajan, a professor at the University of Texas, who is one of the authors of the study.

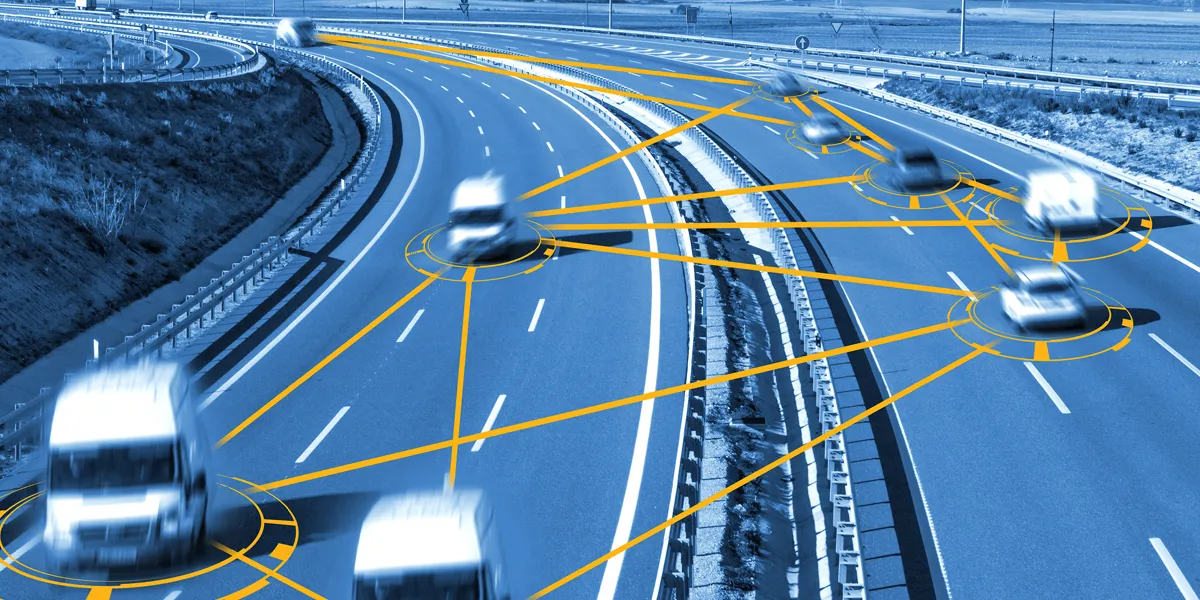

In the context of driving, a reinforcement learning agent can decide whether it needs to accelerate, decelerate, apply the brakes or turn the steering wheel. The agent makes these decisions based on the rewards it expects to receive for each action, which reflect two broad goals: driving safely and reaching the destination sooner. While this dynamic reinforcement learning approach is needed to respond to the positions of other cars, traffic lights and so on, a task such as planning the route does not need to be dynamic and can be done offline.

The team therefore combined an offline planner designed by a human expert with a reinforcement learning algorithm to build the new architecture. The human expert defines the task-subtask hierarchy in the planner, and the planner provides a sequence of high-level tasks for solving the problem. The reinforcement learning agent, on the other hand, handles low-level dynamic tasks, such as keeping a safe distance from other cars and responding to traffic lights. The researchers found that the combined architecture performs such tasks more efficiently and effectively.
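To make the division of labour concrete, here is a toy sketch in Python. It is not the RePReL implementation: the one-dimensional "road", the reward, the leg length and the names `offline_plan`, `learn_subtask` and `run` are all hypothetical, and simple tabular Q-learning (an off-policy method) stands in for the deep reinforcement learning the authors use. The hypothetical planner only splits the route into ordered subgoals, while a separately learned policy decides, step by step, how to reach each one.

```python
import random

ACTIONS = (-1, +1)  # hypothetical low-level actions: move back / move forward on a 1-D road


def step(position, action, road_length):
    """Toy dynamics: move one cell, staying on the road [0, road_length - 1]."""
    return min(max(position + action, 0), road_length - 1)


def offline_plan(start, goal, leg_length=3):
    """Hypothetical offline planner: break the route into an ordered list of subgoals.

    Stands in for the expert-defined task/subtask hierarchy; it never looks at
    the low-level dynamics.
    """
    direction = 1 if goal >= start else -1
    waypoints = list(range(start + direction * leg_length, goal, direction * leg_length))
    return waypoints + [goal]


def learn_subtask(subgoal, road_length, episodes=500, alpha=0.5, gamma=0.95,
                  epsilon=0.1, seed=0):
    """Tabular Q-learning (an off-policy RL method) for reaching one subgoal.

    Reward is -1 per move and 0 for the move that reaches the subgoal.
    """
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(road_length) for a in ACTIONS}
    for _ in range(episodes):
        state = rng.randrange(road_length)
        for _ in range(4 * road_length):  # cap the episode length
            if state == subgoal:
                break
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
            nxt = step(state, action, road_length)
            if nxt == subgoal:
                target = 0.0  # subtask finished
            else:
                target = -1.0 + gamma * max(q[(nxt, b)] for b in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
    return q


def run(start, goal, road_length=10):
    """Follow the high-level plan, using a learned low-level policy for each subgoal."""
    position, trace = start, [start]
    for subgoal in offline_plan(start, goal):
        q = learn_subtask(subgoal, road_length)
        for _ in range(2 * road_length):  # safety cap on execution steps
            if position == subgoal:
                break
            action = max(ACTIONS, key=lambda a: q[(position, a)])
            position = step(position, action, road_length)
            trace.append(position)
    return trace
```

Calling `run(0, 9)` asks the hypothetical planner for the subgoals 3, 6 and 9 and lets a learned policy move the agent between them. Learning a fresh table per subgoal keeps the sketch short; a real system would reuse and generalize what it learns across subtasks.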

“What is technically novel and interesting about this work is that it brings together ideas in expressive knowledge representation, automated planning, and hierarchical reinforcement learning in an elegant unified framework,” says Prof. Prasad Tadepalli, a professor at Oregon State University, who is one of the authors of the study.

Apart from driving, the new architecture also has applications in robotics, since a robot can likewise divide its work into high-level and low-level tasks. For example, a robot directed to clean a house can be given, offline, the sequence in which to clean the various areas of the house (the planner's job), while the RL agent makes more dynamic decisions, such as how long to vacuum a room based on how much cleaning it needs.

“The current framework assumes that given the high-level plan there exists a low-level plan that can achieve the goal (formally called a downward refinement assumption). We plan to include communication between the low-level agents and the high-level planner to relax this assumption,” says Harsha Kokel, lead author of the study, describing the architecture's current limitation and the team's future plans.

[Article](https://link.springer.com/article/10.1007/s00521-022-08119-y)