Attention

Publications

On the weak link between importance and prunability of attention heads

https://doi.org/10.18653/v1/2020.emnlp-main.260

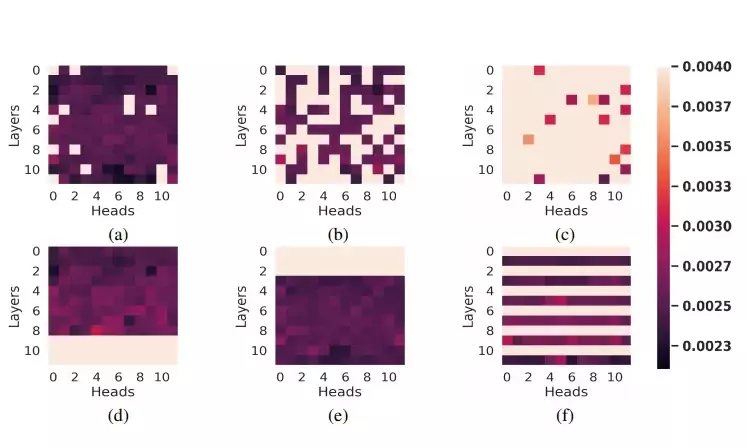

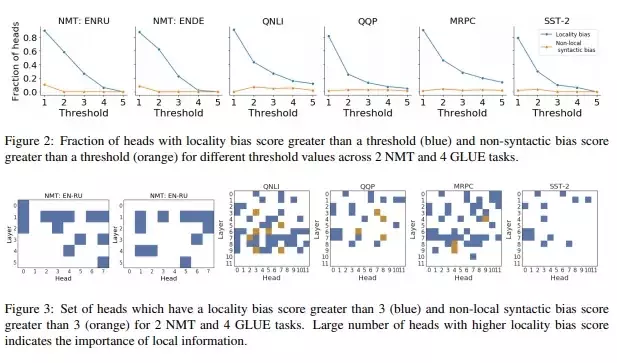

On the Importance of Local Information in Transformer Based Models

https://arxiv.org/pdf/2008.05828.pdf

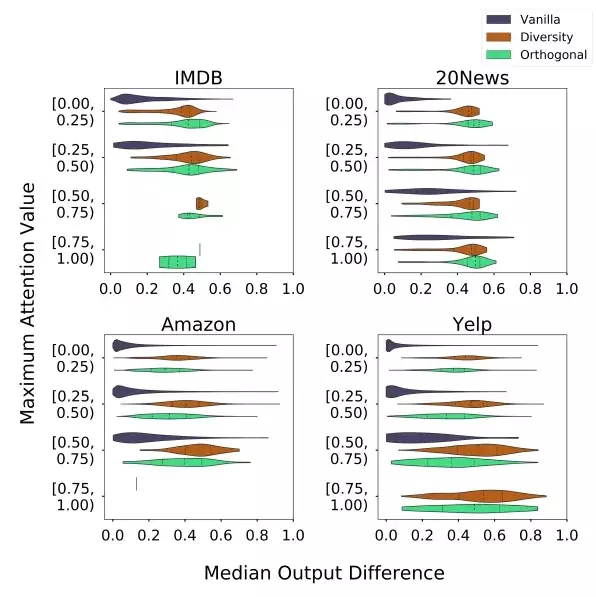

Towards Transparent and Explainable Attention Models

https://doi.org/10.18653/v1/2020.acl-main.387