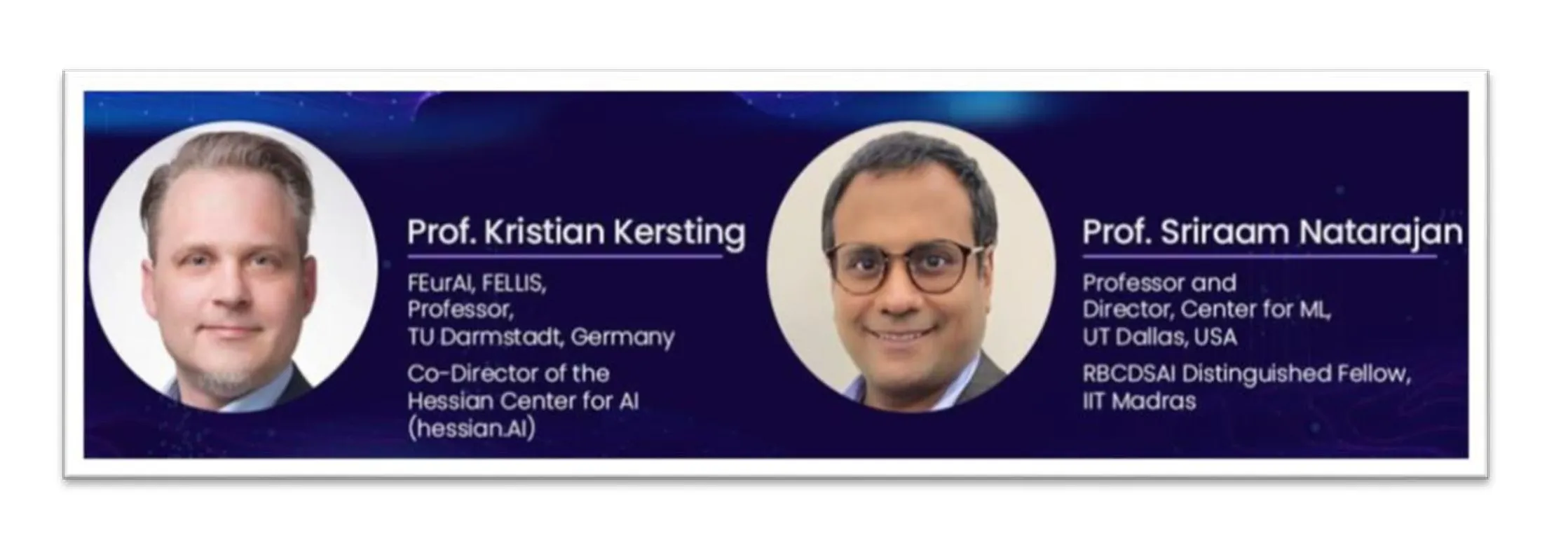

Sparks flew when two friends, Prof. Sriraam Natarajan (UT Dallas) and Prof. Kristian Kersting (TU Darmstadt), got together over a cup of coffee to discuss the topic “Symbolic or Deep Learning? Promising directions of AI”. The talk began by setting the stage: “AI is changing the world and has a huge impact in economics, drug development, agriculture etc.” Kristian believes that AI should be an engine for innovation. He also believes people should listen more to computer scientists on how AI techniques can be leveraged to make a social impact and to solve business and real-world challenges. He emphasized the importance of deep learning to the progress of AI and cited some excellent DL applications in vision and language understanding. However, Kristian seemed skeptical about how humans perceive the definition of “AI”, so he asked Prof. Sriraam to define it.

Sriraam traced the roots of AI back to the 16th century, which reflected his inclination towards symbolic representation: thinkers of that era used symbolic representations to capture the essence of the mind. In Sriraam’s own words, the question is “whether we are interested in acting optimally and thinking optimally, or acting rationally and thinking rationally.” According to Sriraam, the key capabilities that make up an intelligent system are perceiving information and acting automatically on the basis of that perception. Kristian challenged Sriraam’s point of view, noting the lack of a clear definition of AI, and went back to John McCarthy’s definition: “The science and engineering of making intelligent machines, especially intelligent computer programs. It is related to using computers to understand human intelligence, but AI does not have to confine itself to biologically observable methods.”

According to Kristian, the performance of AI should be compared with that of ordinary people, not with cherry-picked, exceptionally intelligent ones. Kristian’s interest lies in pushing AI further by advancing deep learning. Sriraam agrees that learning is a big part of AI but thinks AI is much bigger than learning alone: it also involves reasoning, trustworthiness, and an understanding of current limitations. Kristian walked through the history of deep learning, highlighting landmarks that show DL’s relevance to AI. He described an early wave of neural networks followed by an AI winter, then a trend toward logic and symbolic systems, again followed by an AI winter. He believes that the current wave of deep learning will not be followed by another AI winter. Kristian then asked Sriraam about his faith in symbolic AI and invited him to elaborate on his beliefs. Sriraam cited the physical symbol system hypothesis of Allen Newell and Herbert Simon: “A physical symbol system has the necessary and sufficient means for general intelligent action.” He strongly asserted that people think with symbols. He also offered another perspective on logic and symbols in terms of causality.

He highlighted Judea Pearl’s quote, “Humans are smarter than data. Data do not understand causes and effects; humans do.” Here Kristian pointed out a caveat of causal models, namely their intractability, and again argued for the superiority of deep learning models because they are tractable. Deep learning draws its power from differentiability: models learn by following gradients computed from data. Moving to symbolic representations means giving up these agile training techniques, which are robust and well established. In both approaches, identifying communication and objects remains difficult. Each speaker argued that it is nonetheless possible within his own approach, offering examples of symbolic systems and deep learning systems, respectively.

The friendly debate settled on the agreement that both differentiable neural systems and symbolic planning are promising paths toward real AI. Neuro-symbolic reasoning, which combines deep learning and symbolic planning, can help achieve association, intervention, and counterfactual reasoning. Both speakers agreed that this combination is the future of AI and advised the general audience and researchers to read about and work in both domains. Kristian emphasized the importance of AI alignment, that is, the question “What should we ask AI to do, think, and value?”, which concerns AI systems’ moral values, biases, and trustworthiness. Finally, the speakers defined the third wave of AI as AI systems that can acquire human-like communication and reasoning capabilities, with the ability to recognise new situations and adapt to them. There is still a lot to be done, and they encouraged the audience to join them and make AI a team sport.

The video is available on our YouTube channel: Link